Essay

Hyperland, Intermedia, and the Web That Never Was

10/27/2020

Claire L. Evans

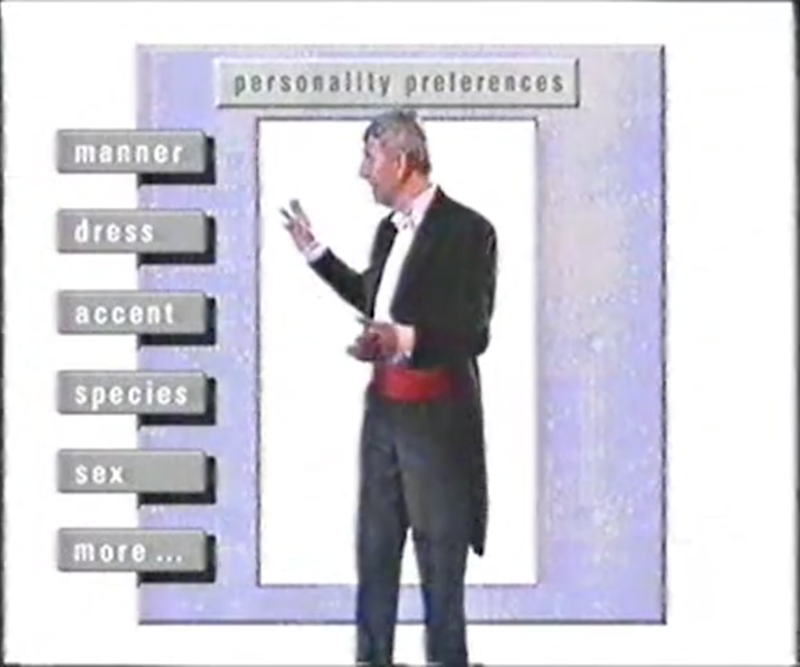

In 1990, the science fiction writer Douglas Adams produced a “fantasy documentary” for the BBC called Hyperland. It’s a magnificent paleo-futuristic artifact, rich in sideways predictions about the technologies of tomorrow. The film opens with Adams asleep in front of his television. In the dream sequence that follows, he grabs the set, lugs it outside, and throws it onto a mountain of garbage. A disembodied voice flickers as the screen tumbles out of sight. “Have you had it with linear media?” it asks. “Are you tired of television that just happens to you?” Adams searches around for the source. A butler materializes, cummerbund and all. He introduces himself as Tom, a “software agent.”

“Agent?” Adams balks. “Does that mean you’ll take fifteen percent?”[1]

No, Tom’s not that kind of agent. He’s more like a silicon valet, a guide through “Hyperland,” a new world in virtual space. Hyperland is a hypermedia utopia, a wealth of information interconnected by links and by clickable moving icons, predecessors of the animated GIF, which Tom charmingly calls “micons.” By clicking links and micons, Hyperland’s visitors can take long informational dérives, much as we might browse the Web today. Tom demonstrates: from a live camera feed of the Atlantic Ocean, he encourages Adams to click on a link to Coleridge’s “Rime of the Ancient Mariner,” which leads Adams to Xanadu, Kubla Khan’s stately pleasure dome, and from there to a hypertext program of the same name. Designed by the software architect and techno-dreamer Ted Nelson, Xanadu would allow writers like Adams to create nonlinear documents in which nothing is ever deleted, every idea can be traced back to its source, and everything is “intertwingled.”

Hyperland aired on the BBC a full year before the World Wide Web went public. It is a prophecy waylaid in time: the technology it predicts is not the Web. It’s what William Gibson might call a “stub,” evidence of a dead node in the timeline, a three-point turn where history paused and backed out before heading elsewhere. The cyberspace Adams imagines is not even online. Rather, it’s something that never properly came to exist: an open framework for moving through a body of knowledge, bending to the curiosities of a trailblazing traveler.

Hyperland might have seemed highly speculative to those who caught it on television, but it didn’t appear unbidden. Adams was a computer geek (he claimed to own the first Apple Macintosh in England)[2] and based his speculative documentary on cutting-edge research of the time.[3] He drew inspiration from the hypertext systems being developed in the pre-Web world, themselves inspired by a prophetic 1945 article by the American scientist Vannevar Bush. Bush proposed a tabletop information-viewing machine, the “Memex,” to serve as an “enlarged intimate supplement” to human memory, making compressed microfiches of documents, books, and records available at the touch of a key. By the early 1990s, this was somewhat feasible, and Hyperland visits with several would-be Memexes: Ted Nelson’s Xanadu, an interactive film about DNA produced by the Apple Multimedia Lab, a children’s novel published in HyperCard, an interactive version of Beethoven’s Ninth Symphony, and the artist Robert Abel’s hypermedia Guernica, which linked discrete images within Picasso’s famous painting to first-person accounts of the bombing it depicts.

Projects like these were everywhere in the late ’80s and early ’90s: showy examples of what might happen at the intersection of information storage and retrieval, with the help of intuitive graphical user interfaces. Hypertext itself dates back to the late 1960s. The engineer Douglas Engelbart premiered the oN-Line System (NLS), his take on the Memex, in ’68, with a presentation generally known in the literature as “The Mother of All Demos.” In the demo, Engelbart used a mouse to move around tiled windows on a screen, highlighting text and collaborating in real time with a colleague back at the Stanford Research Institute. This earned him a standing ovation, but it wasn’t entirely sui generis either; Ted Nelson and a friend from Swarthmore, Andy van Dam, had by then already been developing hypertext systems with van Dam’s computer science students at Brown University. The first system to emerge from this effort, the Hypertext Editing System, or HES, was an “unfunded bootleg experiment” borrowing ideas from Nelson’s Xanadu.[4] It allowed for cutting, pasting, and rearranging text in a nonlinear fashion, but it was largely intended to prepare documents for print, which irritated Nelson, who believed that hypertext, by definition, went beyond the page. HES was eventually used by NASA to produce documentation for the Apollo rockets, but Nelson had left Brown in 1968, by all accounts in a huff. “Ted was a philosopher,” van Dam explained in 2019. “He wanted to keep hypertext pure.”[5]

Left to his own devices, van Dam kept going, and the next system he developed with his students marked an important shift “across to a different technical phylum—from the personal, idiosyncratic memory aid to the public archive.”[6] FRESS, the File Retrieval and Editing System,[7] had less of a relationship to print; FRESS terminals were networked to one another, with the university’s multimillion-dollar IBM mainframe serving as mediator.

In a rare early instance of mainframe computing being applied to the humanities, students at Brown used FRESS to read poetry. They scrolled through and annotated poems with “creative graffiti,” linking sections to one another wherever thematic associations emerged. FRESS awakened the first online scholarly community: in class discussions held within the system, shy students grew garrulous, and the FRESS class produced three times more writing than a computer-free control group,[8] a volume significant enough for their professors to wonder if perhaps they’d only made more work for themselves. Undoubtedly the students felt empowered by FRESS’ most lasting contribution to modern computing: the undo function.[9]

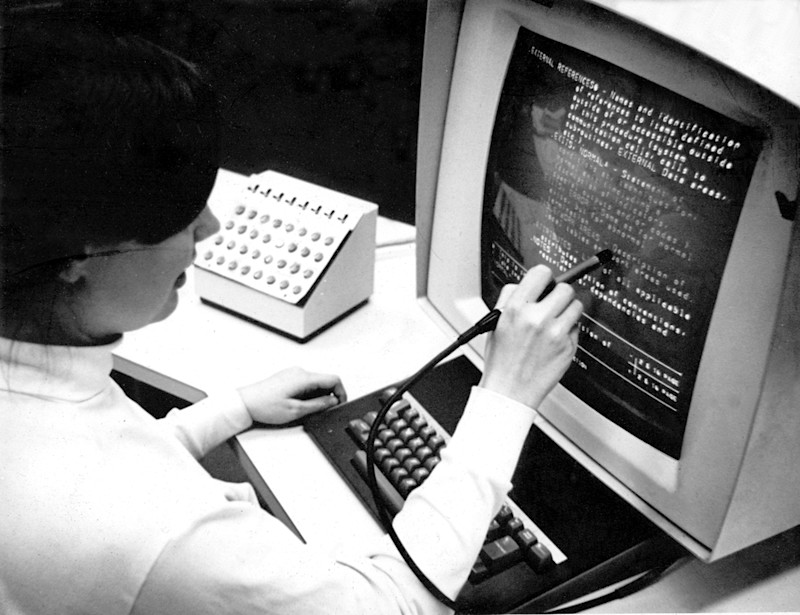

Neither HES nor FRESS would be recognizable to a modern Web user. Students navigated FRESS by holding a light pen to the screen while tapping a foot pedal, more “point and kick” than “point and click,” as one historian has noted.[9] Another decade would pass before Brown produced something we might be able to use intuitively today, by navigating images and text with mouse and cursor. The designers of this next-generation hypertext system were young graduates of the computer science program built by Andy van Dam. “They used to call us the children’s crusade,” laughs one of their brightest, Nicole Yankelovich.[10]

In 1983, Nicole became the first employee of the Institute for Research in Information and Scholarship (IRIS), a research group at Brown funded by a sizable grant from IBM. “Everybody was within two years of my age, above or below. We were really young. And all of these guys from IBM, with the suits and the grey hair—to them we were impossibly young.”

IRIS’ biggest project was the creation of a hypertext software system called Intermedia, a next-level evolution of HES and FRESS designed to be a fully integrated “scholar’s workstation” for faculty and students alike. “The vision of Intermedia,” explains Karen Catlin, one of Nicole’s colleagues, “was that the professors would be using the same tool that they would be using to teach the students.”[11] This meant the system had to be robust enough to support serious scholarship while still remaining user-friendly to undergraduates who had never so much as touched a mouse.

IRIS designed their software with this brief in mind. In the process, they worked closely with faculty and students to identify their needs. A biology professor wanted a way to annotate student lab papers, while an English professor hoped to provide context for the authors he assigned in his first-year survey course. Freshmen couldn’t be expected to know the political conditions of Victorian England, he reasoned, or the daisy chain of literary influences leading to Ulysses, but understanding those things would enrich both Dickens and Joyce.[12] As they hashed out these requirements with faculty, the IRIS team was shadowed by a documentary film crew and a group of ethnographers from Brown’s anthropology department, giving them nearly constant opportunity for reflection.

From observing teaching in action, the IRIS team learned that Ivy League professors don’t handhold; “university instructors do not ‘tutor’ students nearly as much as they try to guide them through bodies of material,” they wrote in an early paper. Rather, they help students “analyze and synthesize the material,” encouraging “them to make connections and discover meaningful relationships”[13] on their own. Fortunately, this kind of association-building is exactly what hypertext makes possible.

Today, we tend to think of applications on our computers and phones as single-use tools. I use Pages to compose documents, Photoshop to adjust images, Safari to browse the Web, and Are.na to compile and organize research materials. Intermedia proposed that all those activities could be connected. As its name suggests, Intermedia was plural: it included InterText, a word processor; InterDraw, a drawing tool; InterPix, for looking at scanned images; InterSpect, a three-dimensional object viewer; and InterVal, a timeline editor. Together, these five applications formed an information environment, a flexible housing for whatever corpus of documents a scholar might want to consult. Where one scholar might load up timelines of English literature and engravings of Dickensian England, another might use Intermedia to examine 3D models of plant cells alongside scientific papers on photosynthesis. During a typical session, all five of the applications would likely be open at once, in a messy, unstructured constellation of windows.

On a system level, some of this is still possible. I can add Web links to a Word document, for example, and my Mail program is clever enough to identify names and addresses in the messages I receive, adding them to my Contacts or my Calendar. As practical as these connections are, they’re only fragments of what Intermedia made possible. Five years before the arrival of the World Wide Web, Intermedia empowered its users to create hyperlinks between their own documents. Viewed as a whole, these links formed an Intermedia “Web.” Distinct from the Web as we know it today, an Intermedia Web was a map of paths. Because information about the links was stored in a separate database from the documents themselves, the program could support multiple perspectives on the same material. Rather than a single shared Web, a collectively authored, globally distributed tangle of hypermedia, Intermedia had as many Webs as it had users, each a bird’s-eye view of every link traveled and where it led.

Nicole gives me a quick-and-dirty example. “You could think of it like a filter,” she explains. Say we have a heap of documents about English literature: poems, historical timelines, portraits, essays, reviews. “I might be interested in the use of love in poetry,” she says, so “I would make all these links about love. But you might be interested in battles, so you could take that same set of documents and make links having to do with battles. Your links and my links don’t have to coexist.”[14] Each Web would serve to illustrate a different, independent path through the same material. But if I, lover of battles, wanted to consider the gentler side of things, I’d need only open Nicole’s Web. Through her eyes, I’d discover an entirely new view of the material.
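The separation Nicole describes, with documents in one shared pool and links kept in per-user stores, can be sketched as a tiny data model. This is a minimal Python illustration under our own assumptions: the names `Link`, `Web`, `neighbors`, and `merge` are hypothetical, and nothing here reflects how Intermedia actually stored its link database. It only shows how keeping links apart from documents lets one corpus carry several independent Webs, each acting as a filter, and how two Webs might be combined.

```python
from dataclasses import dataclass, field

# Hypothetical sketch, not Intermedia's real data model: the documents form one
# shared corpus, and each user's "web" is a separate store of links over it.
@dataclass(frozen=True)
class Link:
    source: str  # id of the document where the link starts
    target: str  # id of the document the link leads to
    note: str = ""

@dataclass
class Web:
    name: str
    corpus: frozenset[str]
    links: set[Link] = field(default_factory=set)

    def add(self, source: str, target: str, note: str = "") -> None:
        # Links may only point at documents in the shared corpus; the documents
        # themselves are never modified, only this web's link store grows.
        if source not in self.corpus or target not in self.corpus:
            raise KeyError(f"unknown document: {source!r} or {target!r}")
        self.links.add(Link(source, target, note))

    def neighbors(self, doc: str) -> list[str]:
        # Only this web's links are visible, so the web acts as a filter.
        return sorted({link.target for link in self.links if link.source == doc})

def merge(name: str, *webs: Web) -> Web:
    # Sharing perspectives: a new web whose links are the union of the inputs.
    merged = Web(name, webs[0].corpus)
    for web in webs:
        merged.links |= web.links
    return merged

corpus = frozenset({"chaucer", "tennyson", "battle_timeline", "war_poems"})

love = Web("love", corpus)
love.add("chaucer", "tennyson", "courtly love")

battles = Web("battles", corpus)
battles.add("chaucer", "war_poems", "knights in combat")
battles.add("battle_timeline", "war_poems")

print(love.neighbors("chaucer"))     # ['tennyson']
print(battles.neighbors("chaucer"))  # ['war_poems']
print(merge("both", love, battles).neighbors("chaucer"))  # ['tennyson', 'war_poems']
```

Because the documents are never edited, adding a link to the battle Web cannot disturb the love Web, and merging two perspectives reduces to a union of two link sets.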

In this way, we could share, and even merge, our perspectives. Traveling Nicole’s love Web, I might recall something from my battle Web, perhaps an instance of courtly love in Chaucer. In that case, I could easily contribute a new link by selecting the point of interest, clicking Start Link, and connecting it to a destination. Intermedia was the first system to allow such granular specificity in its links. “If you look at the systems that came before, they linked to entire pages,” Nicole says. “You link[ed] one document to another document. One of the things that we pioneered was this idea of linking a point to a point rather than a page to a page.”[1] If this sounds familiar, it’s because Intermedia’s “anchor links” made their way into HTML, the language of the World Wide Web. When learning to write HTML, the first thing anyone memorizes is the tag `<a href="">`, which is how the location and destination of every hyperlink on the Web is marked. “What you’re saying between those angle brackets, that’s the anchor,” explains Nicole: it’s what the “a” in “a href” is short for. Like links on the Web, anchor links made across Intermedia’s five applications could be precise to the word or pixel.
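The difference between page-to-page and point-to-point linking comes down to what a link’s endpoints name. In this hypothetical Python sketch (the names `Anchor`, `resolve`, and `span` are invented for illustration), an anchor is assumed to be a document id plus a pair of character offsets; Intermedia’s real anchor format isn’t described here, and an anchor inside an image would need a region rather than offsets. The rough HTML analogue is a link to a fragment, such as a hypothetical `<a href="essay.html#showers">` pointing at an element that carries `id="showers"`.

```python
from dataclasses import dataclass

# Hypothetical sketch: an anchor names a span inside a document (here, character
# offsets), so a link can join a point to a point instead of a page to a page.
@dataclass(frozen=True)
class Anchor:
    doc: str
    start: int  # inclusive character offset
    end: int    # exclusive character offset

@dataclass(frozen=True)
class Link:
    source: Anchor
    target: Anchor

documents = {
    "prologue": "Whan that Aprill with his shoures soote",
    "essay": "Chaucer opens with April showers and pilgrimage.",
}

def resolve(anchor: Anchor) -> str:
    # Return exactly the text the anchor covers, not the whole document.
    return documents[anchor.doc][anchor.start:anchor.end]

def span(doc: str, phrase: str) -> Anchor:
    # Helper: build an anchor around the first occurrence of a phrase.
    start = documents[doc].index(phrase)
    return Anchor(doc, start, start + len(phrase))

link = Link(span("prologue", "shoures soote"), span("essay", "April showers"))
print(resolve(link.source), "->", resolve(link.target))
# shoures soote -> April showers
```

A page-to-page link would only need the two document ids; storing offsets is what lets the link land on a single phrase.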

In 1985, the students at Brown who encountered Intermedia had never seen anything like it before in their lives. The system laid a world of information at their fingertips, saved them hours at the library, and helped them work through tangles of thought. Making Webs was more flexible than drawing concept maps and brainstorms on paper. And although Intermedia was never on the Internet (its corpus of documents was entered, with great effort, by faculty onto university servers), its browsing and linking activity predicted the World Wide Web by half a decade.

Not that Intermedia was particularly well-understood in its time. Although it had its admirers within the close-knit hypertext community and beyond—Apple, whose own HyperCard software was released in 1987, partially sponsored IRIS and brought its researchers together with Apple teams for offsite retreats—more traditional computer scientists didn’t see its appeal, and plenty of academics found the idea of studying literature on the computer sacrilegious. “When you look back at the number of naysayers there were…” Nicole begins, before pausing. “It's hard to really fathom that now,” she continues. “There were all of these naysayers, but I don't think that any of us ever doubted that what we were doing was the future. And it turned out that it was.”

[1] Hyperland (BBC, 1990), https://archive.org/details/DouglasAdams-Hyperland

[2] Matthew G. Kirschenbaum, Track Changes: The Literary History of Word Processing (Cambridge: Harvard University Press, 2016), 112.

[3] Vannevar Bush, “As We May Think,” The Atlantic, July 1945, https://www.theatlantic.com/magazine/archive/1945/07/as-we-may-think/303881/

[4] Belinda Barnet, Memory Machines: The Evolution of Hypertext (London: Anthem Press, 2014), 113.

[5] https://cs.brown.edu/events/halfcenturyofhypertext/

[6] Belinda Barnet, Memory Machines: The Evolution of Hypertext (London: Anthem Press, 2014), 113.

[7] “File Retrieval and Editing System” was a backronym, conceived after the fact. Initially FRESS was named after the Yiddish word “fresser,” meaning glutton—the system gobbled up the Brown mainframe’s memory capacity.

[8] James Gillies and Robert Cailliau, How the Web Was Born (Oxford: Oxford University Press, 2000), 104–105.

[9] Belinda Barnet, Memory Machines: The Evolution of Hypertext (London: Anthem Press, 2014), 108.

[10] Nicole Yankelovich, interview with the author via Skype, January 9, 2017.

[11] Karen Catlin, interview with the author via Skype, January 16, 2017.

[12] Intermedia: From Linking to Learning, https://vimeo.com/20660897.

[13] Nicole Yankelovich, Bernard Haan, and Steven Drucker, “Connections in Context: The Intermedia System,” in Proceedings of the Twenty-First Annual Hawaii International Conference on System Sciences, ed. Bruce D. Shriver (Kailua-Kona, HI, January 5–8, 1988; Washington, D.C.: Computer Society Press of the IEEE), 715–724.

[14] Nicole Yankelovich, interview with the author via Skype, January 9, 2017.

Claire L. Evans is a writer and musician. She is the author of Broad Band: The Untold Story of the Women Who Made The Internet, the singer and coauthor of the pop group YACHT, and the founding editor of Terraform, VICE’s science-fiction vertical. She is the former futures editor of Motherboard, and a contributor to VICE, The Guardian, WIRED, and Aeon; previously, she was a contributor to Grantland and wrote National Geographic’s popular culture and science blog, Universe. She is an advisor to design students at Art Center College of Design and a member of the cyberfeminist collective Deep Lab. She lives in Los Angeles.

Are.na Blog
